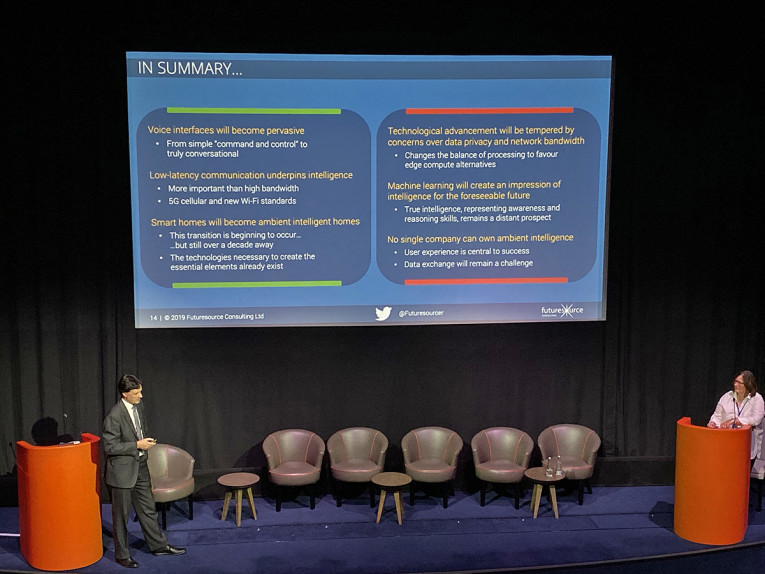

One of the first presentations at this event was from Simon Forrest, principal analyst at Futuresource Consulting, who focused on the Smart Home Technology Evolution and specifically addressed the topic of voice recognition, voice control, and voice assistants in that domain. I remember tweeting about "reality setting in on voice potential" after this presentation. Effectively, Simon Forrest summarized all the main technology trends in the space, calling things what they are and providing a realistic perspective on achievements, technology evolution, challenges, and actual market opportunities.

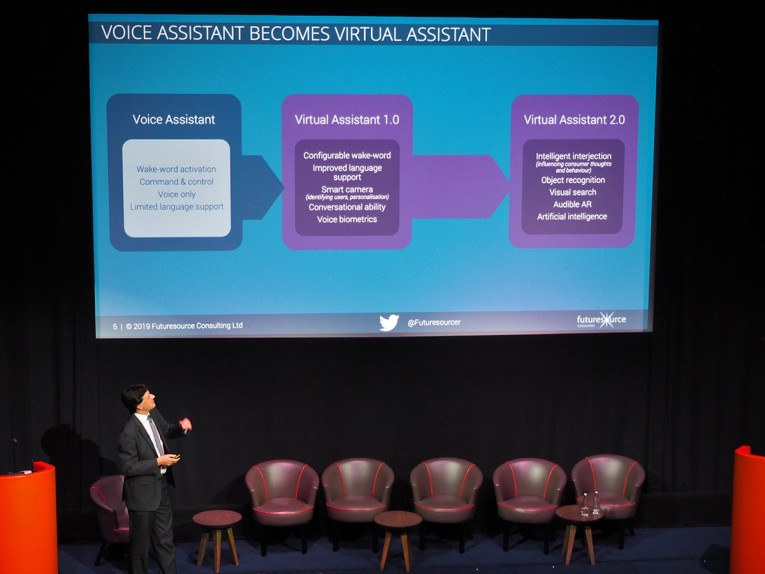

Like most of the audience and, I believe, many of those involved in the development of voice recognition, I strongly agree with Simon Forrest's view that we still have a long way to go to turn today's voice command and control interactions into real "virtual assistants." As the presentation detailed, that means evolving toward configurable wake words, improving language support, and implementing the ability to identify users through face recognition (smart camera) and voice biometrics. Eventually, it means perfecting the conversational abilities of what Forrest called Virtual Assistants 1.0.

His view of the evolution in this field - Virtual Assistant 2.0 - described the ability of artificial intelligence engines to actually power features such as context awareness in conversations or object recognition in image analysis, paving the way to visual search and audible AR. Ultimately, it would even add intelligent interjection (influencing consumer thoughts and behavior), evolving toward a single VA solution with a fluid user experience across systems, and edge-based cognitive reasoning. Futuresource's timeline for this evolution? Five to 10 years.

As Wetherill highlighted, there are too many technologies and buzzwords "out there," and this diversified panel of industry insiders offered a nice perspective into what it all means. This is an important topic, because it is increasingly palpable that many of these "enhanced experiences" are already having a meaningful impact in the audio industry - at the consumer and professional application levels. In fact, the consumer electronics industries are actually desperately pushing for something new like this.

Le Nost, bringing the perspective of a leading professional audio manufacturer, provided a nice overview of all that's involved when we currently talk about these "enhanced experiences," from playback to live sound technologies. From his perspective, immersive sound for live events has taken off exponentially, reflecting consumers' legitimate interest in and need for enhanced audio experiences.

Le Nost also provided a nice summary of the main immersive audio technologies and the opportunities they convey. Professional audio companies like L-Acoustics have long been trying to "immerse" the spectator with reinforced sound, but only when they started combining spatial audio processing and evolving from channel-based to object-based audio did it become possible to create a new experience that was sufficiently rewarding to generate excitement both among audiences and among event, live show, and tour promoters. The key, as Le Nost described it, was not only to surround everyone with sound but also to be able to localize a sound source precisely on stage. "For us, immersion is quite different from localization, and I think that in 3D audio the two terms are still valid for a lot of use cases."

Le Nost also appealed to those attending the Audio Collaborative 2019 event to support initiatives that will allow users to bridge all channel-, scene-, and object-based sound models in the future, such as the existing Audio Definition Model (ADM), promoted by the EBU and described in Recommendation BS.2076, recently approved by the ITU. Some technology providers in the consumer space (e.g., Dolby and DTS) and those involved in the MPEG-H development efforts (e.g., the Fraunhofer institute) have already adopted the ADM metadata model. L-Acoustics is now pushing the professional audio industry to do the same. More importantly, Le Nost warned that the existing creative tools for immersive sound are still not friendly enough for creators, that more work is needed to make sure that content is correctly translated across different platforms, and that a lot more effort is needed on that front.

Strangely, it was Stiles who highlighted how new products such as the Amazon Echo Studio and the Sennheiser AMBEO soundbar are actually bringing that experience closer to the consumer and allowing people to have new enhanced experiences, including interacting with the audio of live sports broadcasts. The fact that streaming services are now supporting and distributing actual content in immersive formats, such as Dolby Atmos or MPEG-H (Sony 360 Reality Audio), is something that the whole panel agreed will help bring scale to the new formats quickly.

But as Wetherill cleverly pointed out to Andreas Ehret from Dolby, while Dolby Atmos is a wonderful experience in the cinema, it is painfully obvious that a small device such as a smartphone or a single wireless speaker, some soundbars, and even something like the Amazon Echo Studio are not able to generate the same positive impact. Is there a risk that the original concept is endangered? And is this something that Dolby worries about? Wetherill asked. Ehret confirmed, "I worry about that every day. But the approach we have been taking is that Atmos cannot be and should not be just a premium, niche experience to those who install dozens of speakers in their home. That doesn't make sense. You wouldn't have people producing in that format if that was all you can address. The approach we've taken is to make sure that we can address the entire market and all those devices. Whenever you are feeding those devices with Atmos, with an original Atmos mix, you still get 'an uplift' from what you would have with stereo," he shared. "I think that is a lesson we've learned from surround sound, and at the very early stages of Atmos development it was clear to us that we shouldn't repeat the same mistake."

From the same session, I personally found very interesting the testimony from Paul Gillies that his company, Headphone Revolution, is having massive success with "entertaining people with headphones," not only with Silent Discos - those are becoming a major attraction at festivals throughout the year - but also with all sorts of parties and events in places that don't have a noise license. Increasingly, Gillies explained, there are applications at corporate events where people can use headphones to choose between multiple types of content. Artists and content creators are also aware of the potential of headphones, personalization, and enhanced binaural experiences, and want to explore those for multiple people at the same time. For the moment, the main hurdle for these events is the need to deliver multiple signals to a lot of headphones at the same time. The next step, according to Headphone Revolution, will be personalization, giving people a real reason to choose to listen on headphones during events. Very promising.

During this event's closing session, Carl Hibbert, Head of Consumer Media & Technology at Futuresource, talked with Bill Neighbors, SVP & GM, Cinema and Home, Xperi; and Pete Wood, Senior Vice President, New Media Distribution, Sony Pictures, about a new initiative to bring enhanced experiences to consumers, in this case involving the IMAX Enhanced (www.IMAXenhanced.com) platform and content.

For those not aware, in September 2019, IMAX Corp. and DTS, a wholly owned subsidiary of Xperi, announced an expansion of the IMAX Enhanced ecosystem spanning new streaming platforms, movies from Sony Pictures Home Entertainment and other studios, and device partners throughout the United States, Europe, and China. IMAX Enhanced is a premier, immersive at-home entertainment experience - combining exclusive, digitally remastered 4K HDR content with high-end consumer electronics to offer a new level of sight, sound, and scale. IMAX Enhanced device partners include Arcam, AudioControl, Denon, Elite, Integra, Lexicon, Marantz, Onkyo, Pioneer, Sony Corp., TCL, and Trinnov, with Anthem and StormAudio recently joining as well.

Interestingly, Pete Wood reminded us how in the digital age there's been this recurring phenomenon of technology moving forward very quickly, forcing content - and in certain measure, services - to catch up. He reminded us of the early days of Netflix, when it was all about convenience versus a not-so-great quality experience, or the early days of HDTV broadcasting and the lack of a consistent consumer experience. Wood agrees that consumers have an expectation of high quality and that there's already an association in consumers' minds with the IMAX brand. That was the reason why Sony decided to offer content to match that experience and offer a complete quality ecosystem from creation to the end consumer. As Bill Neighbors reinforced, IMAX Enhanced is a platform, not a new standard. It actually uses existing standards like HDR10 because it doesn't want to burden the consumer with another standardization process. On the audio front, it uses existing DTS formats. IMAX Enhanced sound needs a 5.1.4 speaker system at minimum, while optimum results come with the recommended 7.2.4 configuration, and reproduction is compatible with the DTS Neural:X upmixer found in many AVRs.

So, for these two Hollywood-minded industry veterans, there's no question that the opportunity is in trying to "enhance" the experience with the best the industry can offer - starting with the best home cinema experiences - and letting consumers vote with their wallets.

This article was originally published in The Audio Voice email weekly newsletter.