Based on the Intel Movidius Myriad X vision processing unit (VPU) and supported by the Intel Distribution of OpenVINO toolkit, the Intel NCS 2 affordably speeds the development of deep neural network inference applications while delivering a performance boost over the previous-generation neural compute stick. The Intel NCS 2 enables deep neural network testing, tuning and prototyping, so developers can move from prototype to production using a range of Intel vision accelerator form factors in real-world applications.

“The first-generation Intel Neural Compute Stick sparked an entire community of AI developers into action with a form factor and price that didn’t exist before. We’re excited to see what the community creates next with the strong enhancement to compute power enabled with the new Intel Neural Compute Stick 2,” says Naveen Rao, Intel corporate vice president and general manager of the AI Products Group.

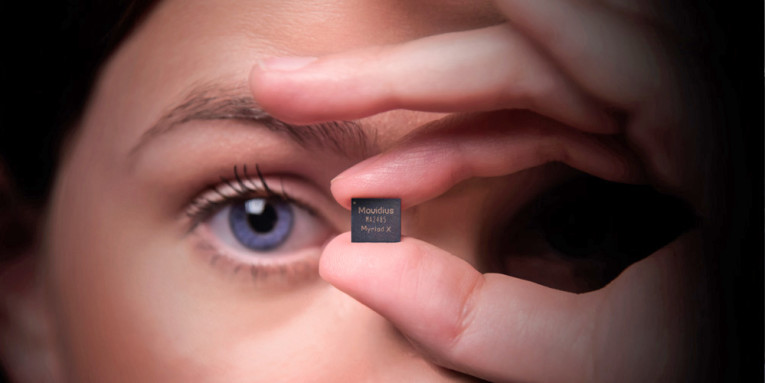

According to Intel, the enhanced capabilities of the Intel NCS 2 make it easy to bring computer vision and AI to Internet of Things (IoT) and edge device prototypes. What looks like a standard USB thumb drive hides much more inside. The Intel NCS 2 is powered by the latest generation of Intel VPU, the Intel Movidius Myriad X VPU. It is the first VPU to feature a neural compute engine, a dedicated hardware accelerator for neural network inference that delivers additional performance. Combined with the Intel Distribution of OpenVINO toolkit, which supports more networks, the Intel NCS 2 offers developers greater prototyping flexibility. Additionally, thanks to the Intel AI: In Production ecosystem, developers can now port their Intel NCS 2 prototypes to other form factors and productize their designs.

The Intel NCS 2 plugs into a standard USB 3.0 port and requires no additional hardware, enabling users to convert PC-trained models and then deploy them natively to a wide range of devices without internet or cloud connectivity.

The first-generation Intel NCS, launched in July 2017, has fueled a community of tens of thousands of developers, has been featured in more than 700 developer videos and has been used in dozens of research papers. With the greater performance of the NCS 2, Intel aims to help the AI community create even more ambitious applications.

At the Beijing event, Intel also promoted Cascade Lake, an upcoming Intel Xeon Scalable processor that will introduce Intel Optane DC persistent memory and a set of new AI features called Intel DL Boost. This embedded AI accelerator is expected to speed up deep learning inference workloads, with enhanced image recognition performance compared with current Intel Xeon Scalable processors. Cascade Lake is targeted to ship in 2018 and ramp in 2019.

Intel's Vision Accelerator Design Products, which target AI inference and analytics performance on edge devices, come in two forms: one that features an array of Intel Movidius VPUs and one built on the high-performance Intel Arria 10 FPGA. The accelerator solutions build on the OpenVINO toolkit, which provides developers with improved neural network performance on a variety of Intel products and helps them unlock cost-effective, real-time image analysis and intelligence in their IoT devices.

Spring Crest is the Intel Nervana Neural Network Processor (NNP) that is expected to reach the market in 2019. The Intel Nervana NNP family exploits compute characteristics specific to AI deep learning, such as dense matrix multiplications and custom interconnects for parallelism.

www.intel.com